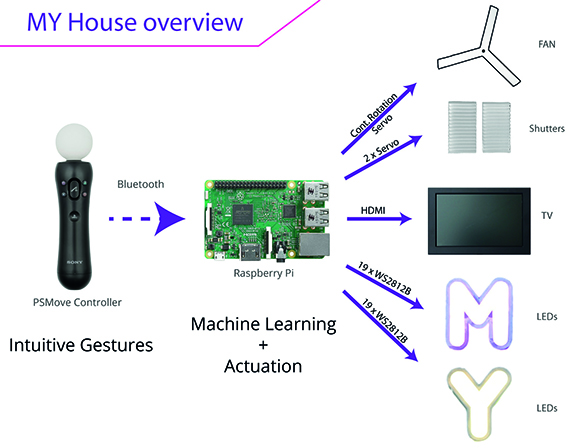

When a master’s degree course at the University of Washington required the use of sensors and machine learning in the same project, two students – Maks Surguy and Yi Fan Yin – conceived the idea of an interactive doll’s house. Inside this cool crib, various features – including lighting and shutters – can all be turned on and off by the simple wave of a ‘wand’ (a PlayStation Move controller), with the help of some clever coding and a Raspberry Pi 3.

“I thought a smart doll’s house would be a great tool to demonstrate technical innovations to people in an approachable way,” says Maks, who worked with Yi Fan over a ten-week period, designing and constructing the clever little doll’s domicile.

After consulting Maks’s architect wife about the physical structure, the pair drew the plans in 3D modelling software, then fitted together cardboard pieces for a prototype. Once happy with the design, they laser-cut the pieces out of plywood, joined them with snap-fit joints, and painted them in different colours.

According to Maks, building a doll’s house is akin to building a real house. “Lots of decisions needed to be made about dimensions, colours, structure, function, and interactions between all elements of the dollhouse. We ended up simplifying a lot of the elements through an iterative process after realising that what we envisioned was actually a lot harder than it seemed. Thankfully we had 24/7 access to a makerspace here in school and were able to reach decisions through prototyping every aspect of the construction.”

MyHouse: gesture recognition and response

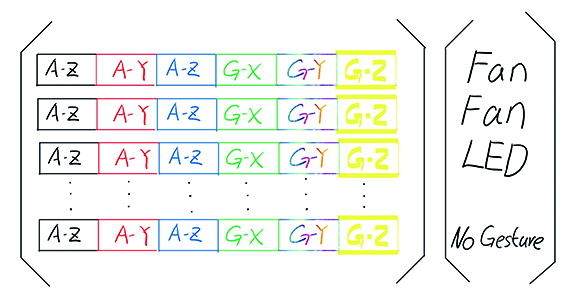

A key characteristic of this smart doll’s house is its ability to recognise gestures and respond accordingly. The pair studied gesture recognition extensively, and “trial and error went into choosing what gestures perform best across individuals while remaining intuitive to most people,” says Maks. “We read a lot of research papers on gesture recognition and then came up with our own gestures that worked with over 90 percent accuracy.”

In total, seven gestures, pre-trained using machine learning, are stored in the system. The Raspberry Pi reads motion data from the PlayStation Move controller and determines whether the movement matches one of the stored gestures. As Maks explains, if the gesture is recognised, “various functional items in the dollhouse can be activated or deactivated using these pre-trained gestures: TV, lights, fan, and shutters.”
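The article doesn't spell out how a movement is compared with the stored gestures, and the real system used a trained neural network. As a simpler stand-in, here is a minimal sketch of nearest-template matching in plain Python: it resamples a variable-length accelerometer trace to a fixed length, finds the closest stored template, rejects the movement if even the best match is too far away, and toggles the device mapped to that gesture. The gesture names, the device mapping, the 16-point resampling and the 0.5 threshold are all hypothetical choices for illustration, not details of the project.

```python
import math
from typing import Optional

Sample = tuple[float, float, float]  # one (x, y, z) accelerometer reading


def resample(trace: list[Sample], n: int = 16) -> list[Sample]:
    """Linearly interpolate a trace of any length (>= 1) to exactly n points (n >= 2)."""
    if len(trace) == 1:
        return [trace[0]] * n
    out: list[Sample] = []
    for i in range(n):
        pos = i * (len(trace) - 1) / (n - 1)  # fractional index into the original trace
        lo = int(pos)
        hi = min(lo + 1, len(trace) - 1)
        frac = pos - lo
        out.append(tuple(a + (b - a) * frac for a, b in zip(trace[lo], trace[hi])))
    return out


def trace_distance(a: list[Sample], b: list[Sample]) -> float:
    """Mean point-to-point Euclidean distance between two equal-length traces."""
    return sum(math.dist(p, q) for p, q in zip(a, b)) / len(a)


def recognise(trace: list[Sample], templates: dict[str, list[Sample]],
              threshold: float, n: int = 16) -> Optional[str]:
    """Return the closest template's name, or None if nothing is within threshold."""
    candidate = resample(trace, n)
    best_name, best_dist = None, math.inf
    for name, template in templates.items():
        d = trace_distance(candidate, resample(template, n))
        if d < best_dist:
            best_name, best_dist = name, d
    return best_name if best_dist <= threshold else None


# Hypothetical mapping from gesture name to the device it toggles.
GESTURE_ACTIONS = {"swipe_up": "shutters", "circle": "fan", "flick": "lights", "zigzag": "tv"}


def handle(trace: list[Sample], templates: dict[str, list[Sample]],
           state: dict[str, bool], threshold: float = 0.5) -> Optional[str]:
    """Recognise a gesture and toggle its device in `state`; unrecognised moves do nothing."""
    gesture = recognise(trace, templates, threshold)
    if gesture in GESTURE_ACTIONS:
        device = GESTURE_ACTIONS[gesture]
        state[device] = not state.get(device, False)
    return gesture


# Demo with two made-up templates and a slightly noisy upward swipe.
templates = {
    "swipe_up": [(0.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 2.0, 0.0)],
    "circle": [(1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (-1.0, 0.0, 0.0), (0.0, -1.0, 0.0)],
}
state: dict[str, bool] = {}
result = handle([(0.0, 0.0, 0.0), (0.0, 0.6, 0.0), (0.0, 1.1, 0.0), (0.0, 1.5, 0.0), (0.0, 2.1, 0.0)],
                templates, state)
print(result, state)  # prints: swipe_up {'shutters': True}
```

The rejection threshold matters as much as the matching itself: without it, every stray wave of the wand would be forced onto the nearest gesture and switch something on or off.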

The machine learning aspect of the project also presented certain challenges, as Maks recalls: “We ran into trouble selecting the most intuitive gestures and had to do quite a bit of trial and error to refine the experience. It takes about 20 samples per gesture to train the neural network, which is doable in a matter of a few minutes.”
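To give a feel for what training on about 20 samples per gesture involves, here is a minimal sketch of the simplest possible neural network: a single-layer softmax classifier trained with stochastic gradient descent, written in pure Python. The article doesn't describe the network the students actually used, so the architecture, learning rate, epoch count and the synthetic "gesture" vectors in the demo are all assumptions. It also assumes each recorded gesture has already been reduced to a fixed-length feature vector.

```python
import math
import random


def softmax(z: list[float]) -> list[float]:
    """Numerically stable softmax: subtract the max logit before exponentiating."""
    m = max(z)
    exps = [math.exp(v - m) for v in z]
    total = sum(exps)
    return [e / total for e in exps]


def logits(W: list[list[float]], b: list[float], x: list[float]) -> list[float]:
    """One raw score per class: dot(W[c], x) + b[c]."""
    return [sum(w * xi for w, xi in zip(Wc, x)) + bc for Wc, bc in zip(W, b)]


def train(samples: list[list[float]], labels: list[int], n_classes: int,
          epochs: int = 200, lr: float = 0.1, seed: int = 0):
    """Fit weights W[c][j] and biases b[c] by per-sample gradient descent on cross-entropy loss."""
    rng = random.Random(seed)
    n_features = len(samples[0])
    W = [[rng.uniform(-0.01, 0.01) for _ in range(n_features)] for _ in range(n_classes)]
    b = [0.0] * n_classes
    order = list(range(len(samples)))
    for _ in range(epochs):
        rng.shuffle(order)
        for i in order:
            x, y = samples[i], labels[i]
            probs = softmax(logits(W, b, x))
            for c in range(n_classes):
                # Gradient of cross-entropy w.r.t. logit c is (predicted prob - one-hot target).
                grad = probs[c] - (1.0 if c == y else 0.0)
                b[c] -= lr * grad
                for j in range(n_features):
                    W[c][j] -= lr * grad * x[j]
    return W, b


def predict(W: list[list[float]], b: list[float], x: list[float]) -> int:
    """Return the index of the most likely gesture class."""
    z = logits(W, b, x)
    return max(range(len(z)), key=z.__getitem__)


# Demo: three made-up "gestures" as 4-value feature vectors, 20 noisy samples each.
data_rng = random.Random(1)
centres = [[1.0, 0.0, 0.0, 0.0], [0.0, 1.0, 0.0, 0.0], [0.0, 0.0, 1.0, 0.0]]
samples: list[list[float]] = []
labels: list[int] = []
for label, centre in enumerate(centres):
    for _ in range(20):
        samples.append([v + data_rng.gauss(0.0, 0.1) for v in centre])
        labels.append(label)

W, b = train(samples, labels, n_classes=len(centres))
accuracy = sum(predict(W, b, x) == y for x, y in zip(samples, labels)) / len(samples)
print(f"training accuracy: {accuracy:.0%}")
```

With only 20 examples per gesture, a small model like this has few weights to fit; a network with large hidden layers would have many more parameters to learn from the same data and could be more prone to overfitting.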

Using MyHouse to teach Raspberry Pi and computing

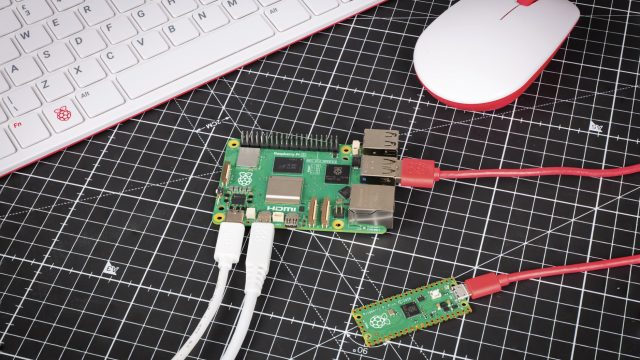

Maks was keen to use a Raspberry Pi in this project because he is enthusiastic about the possibilities it presents: “I am interested in pushing the Pi to its limits.” He also has plans for the future: he is currently working with the Processing Foundation, as part of the Google Summer of Code initiative, to lower the barriers to using the Processing language on the Raspberry Pi. “My plan is to create a comprehensive resource that teaches people of all ages how to use the Raspberry Pi and Processing together, taking advantage of all connectivity and interactivity available on the Pi.”

If the idea of an interactive doll’s house appeals, the open-source nature of the code that Maks has created means that this is a build that anyone can attempt, as long as they possess some coding skills and the necessary components. “We haven’t released the building plans for the dollhouse yet but if somebody’s interested, I can share those as well,” says Maks.

Step-01: Plywood structure

After a cardboard prototype had been built, the doll’s house was made from laser-cut plywood pieces that snap together.

Step-02: NeoPixel letters

The laser-cut M and Y letters are each fitted with a strip of NeoPixel LEDs. Servos are used to rotate the ceiling fan and open the shutters.
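Both kinds of output can be sketched in a few lines of Python. Hobby servos are typically positioned with a 50 Hz PWM signal whose pulse width (roughly 1 to 2 ms, though the exact range varies by model) sets the angle, and NeoPixel (WS2812) LEDs expect each colour as three bytes in green, red, blue order. The article doesn't say which servos, pins or libraries the project used, so the pulse range and the function names below are assumptions, and the sketch only computes values rather than driving any hardware.

```python
SERVO_FREQ_HZ = 50     # typical hobby-servo refresh rate, i.e. a 20 ms period
MIN_PULSE_MS = 1.0     # assumed pulse width at 0 degrees (varies by servo model)
MAX_PULSE_MS = 2.0     # assumed pulse width at 180 degrees (varies by servo model)


def servo_duty_cycle(angle_deg: float) -> float:
    """Convert a servo angle (clamped to 0-180 degrees) to a PWM duty cycle in percent."""
    angle = max(0.0, min(180.0, angle_deg))
    pulse_ms = MIN_PULSE_MS + (MAX_PULSE_MS - MIN_PULSE_MS) * angle / 180.0
    period_ms = 1000.0 / SERVO_FREQ_HZ
    return 100.0 * pulse_ms / period_ms


def grb_bytes(r: int, g: int, b: int) -> bytes:
    """Pack an RGB colour (0-255 per channel) in the green-red-blue order WS2812 LEDs expect."""
    return bytes((g, r, b))


def strip_frame(colours: list[tuple[int, int, int]]) -> bytes:
    """Concatenate per-LED colours into one buffer for a strip, first LED first."""
    return b"".join(grb_bytes(r, g, b) for r, g, b in colours)


# Example values only; the project's actual angles and colours aren't given.
print(servo_duty_cycle(0), servo_duty_cycle(90))      # prints: 5.0 7.5
print(strip_frame([(255, 0, 0), (0, 0, 255)]).hex())  # prints: 00ff000000ff
```

The duty cycle is returned as a percentage because that is the form some Raspberry Pi PWM libraries accept; a library that takes a pulse width in microseconds or a fractional value would need a different conversion.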

Step-03: Gesture training

Maks and Yi Fan researched the most intuitive gestures to use. A neural network was then trained on around 20 samples of each gesture.